Finding ways today to distil the knowledge that makes artificial intelligence so valuable will ensure we have a tomorrow where it is available to those who need it most, and at a lower cost to the environment.

Dr Anna Bosman and Heinrich van Deventer, Department of Computer Science

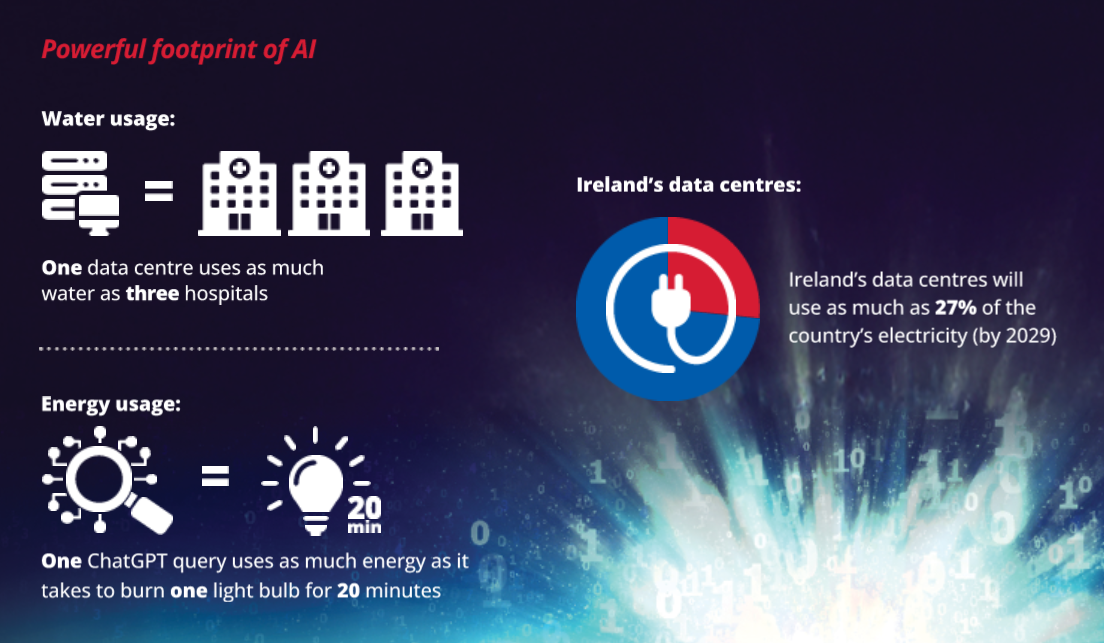

A single query to ChatGPT uses as much electricity as burning a light bulb for about 20 minutes. [1] Multiply that by the millions of requests that this artificial intelligence (AI) chatbot receives each day, and the environmental impact is ominous.

The Computational Intelligence Research Group (CIRG), led by Dr Anna Bosman of the University of Pretoria’s (UP) Department of Computer Science, is searching for ways to reduce the energy consumption of artificial neural networks without sacrificing their performance.

“If we want AI to be sustainable, we must make it compressible,” she says.

Artificial neural networks (ANNs) can automatically extract patterns from data through a process called training or machine learning (ML). While ‘artificial’, ANNs were inspired by the human brain’s ability to process information. ML allows computers to perform tasks such as image recognition, natural language processing and decision-making without being explicitly programmed. However, the size and complexity of ANNs have grown exponentially over the past decade, and that’s not always good news.

“State-of-the-art ANNs often have billions of parameters, demanding massive computational power for training and deployment,” Dr Bosman explains. “This rapid increase in model size has raised significant concerns about their accessibility and environmental impact. The data centres built in Ireland, which are crucial for the modern ML infrastructure, are projected to consume 27% of the country’s electricity by 2029. An average data centre is estimated to use as much water as three average-sized hospitals. [2] Using large ANNs is costly and has a significant environmental footprint.” [3]

Another downside of large ANNs is that they cannot be deployed in resource-constrained environments. Not everybody has access to a Google data centre. As such, impressive progress in AI remains inaccessible to those who may need it most: doctors in rural areas, small-scale farmers and nature conservationists.

“Energy efficiency can be achieved in two ways: by compressing large models to reduce their size or by designing more expressive ANN architectures requiring fewer parameters to achieve comparable results to standard ANNs,” Dr Bosman says.

A promising avenue for green ML is knowledge distillation (KD), a method of transferring knowledge from a large ‘teacher’ ANN to a smaller ‘student’ ANN so that performance is preserved in a more compact form. The student is trained to mimic the way the teacher represents information. Using this technique, Dr Bosman and collaborators achieved a tenfold reduction in the size of a pest detection model for a farming project in Rwanda. Another research project is underway in which KD methods are applied directly to the ANN parameters rather than to the outputs they produce.
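For readers who want to see the idea in code, here is a minimal sketch of the classic output-based form of knowledge distillation, in which the student is penalised for disagreeing with the teacher's "softened" predictions. It is an illustration only, not the method used in the CIRG projects: the function names, the temperature value and the example scores are all assumptions made for this sketch.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and the student's softened outputs.

    The temperature (an assumed value here) exposes how the teacher ranks
    the 'wrong' classes, which is the extra knowledge the student copies.
    """
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Scaling by T^2 keeps the loss magnitude comparable across temperatures.
    return temperature ** 2 * kl


# Hypothetical scores for three classes, e.g. three kinds of pest.
teacher = [4.0, 1.0, 0.2]
good_student = [3.8, 1.1, 0.3]  # close to the teacher
poor_student = [0.2, 1.0, 4.0]  # ranks the classes the other way round

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

The loss is zero when the student reproduces the teacher exactly and grows as their predictions diverge, so minimising it during training pulls the small model towards the large one's behaviour.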

Heinrich van Deventer, a PhD student at CIRG and recipient of a Google PhD Fellowship, is using his background in theoretical physics to develop radically new compact ANN architectures (or neural operators) from the ground up. The trick is to treat inputs as continuous functions similar to analogue computing, rather than discrete or independent variables.

“Such compact ANN models may become the building blocks for the next generation of AI that is accessible to all, and mindful of the world,” he says.

Machine-learning models are larger than ever, and yield results that have an impact on every aspect of our lives. However, creating and using such bloated models comes at a price: they are inaccessible to most organisations and people, and the associated energy demands and environmental impact are significant concerns. Finding ways to distil the knowledge of these artificial neural networks, and to develop new compact neural operators from the ground up, is the seedbed of future AI innovation.